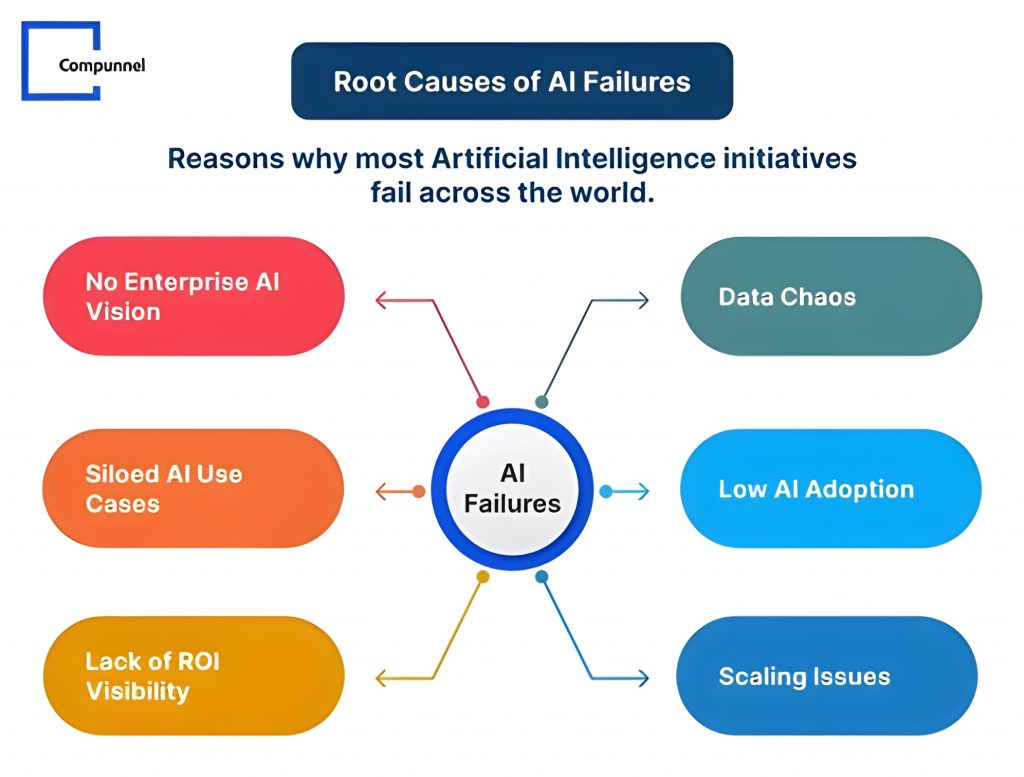

Why Most AI Projects in Kenya Fail Before They Start

Artificial Intelligence is no longer experimental. In Kenya, however, most AI projects never reach production—or fail quietly after deployment. This is not due to a lack of ambition or interest. It is because AI initiatives are often started for the wrong reasons, with the wrong assumptions, and without the structural foundations required for success.

This article explains why most AI projects in Kenya fail before they start, and what serious organizations must do differently.

1. AI Is Treated as a Tool, Not a System

The most common failure point is conceptual.

AI is often approached as:

- A feature to add

- A model to integrate

- A quick win to demonstrate innovation

In reality, AI is a system-level capability. It depends on data pipelines, governance, infrastructure, monitoring, and operational ownership. When organizations attempt to “add AI” without redesigning the surrounding system, the model has nothing dependable to run on and no one responsible for keeping it running, so the project fails.

Successful AI initiatives start with systems thinking, not tools.

2. Poor Data Readiness Is Ignored

AI systems are only as reliable as the data they consume. In many Kenyan organizations:

- Data is fragmented across departments

- Records are incomplete or inconsistent

- There is no clear data ownership

- Historical data was never designed for analytics

Despite this, teams proceed directly to model selection.

This creates a predictable outcome: models that appear impressive in demos but collapse in real-world usage.

Data readiness is not optional. It is the first gate—not a later fix.
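To make the idea of a "first gate" concrete, here is a minimal sketch of a readiness check that runs before any modeling begins. It is an illustration under stated assumptions, not a standard: the function name `check_readiness`, the 95% completeness threshold, and the sample member records are all hypothetical, and a real check would also cover consistency, ownership, and how recent the data is.

```python
from dataclasses import dataclass


@dataclass
class ReadinessReport:
    completeness: dict   # field name -> fraction of records with a non-empty value
    duplicate_ids: int   # number of records whose ID repeats an earlier record
    passed: bool         # True only if every check below succeeds


def check_readiness(records, required_fields, id_field, min_completeness=0.95):
    """Hypothetical gate: returns a report saying whether modeling may start."""
    total = len(records)

    # Share of records in which each required field is actually filled in.
    completeness = {}
    for field in required_fields:
        filled = sum(1 for r in records if r.get(field) not in (None, ""))
        completeness[field] = filled / total if total else 0.0

    # Count records whose identifier has already been seen.
    seen = set()
    duplicates = 0
    for r in records:
        key = r.get(id_field)
        if key in seen:
            duplicates += 1
        seen.add(key)

    passed = (
        total > 0
        and duplicates == 0
        and all(c >= min_completeness for c in completeness.values())
    )
    return ReadinessReport(completeness, duplicates, passed)


# Illustrative records only; the field names are assumptions.
records = [
    {"member_id": "A1", "branch": "Nakuru", "balance": 1200},
    {"member_id": "A2", "branch": "", "balance": 800},
    {"member_id": "A2", "branch": "Kisumu", "balance": None},
]
report = check_readiness(records, ["branch", "balance"], "member_id")
print(report.passed)  # False: missing values and a repeated member_id
```

The value of a check like this is less in the code than in the rule around it: if the report does not pass, the work goes to fixing the data, not to choosing a model.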

3. Infrastructure Realities Are Underestimated

Many AI projects are designed as if they will run in ideal conditions:

- Always-on connectivity

- Predictable traffic

- Clean integrations

- Unlimited compute

Kenya’s operating environment is different. Power instability, variable connectivity, cost-sensitive infrastructure, and complex payment ecosystems must be designed for from day one.

AI systems that ignore these realities rarely survive beyond pilots.
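One way to design for variable connectivity is to let the system degrade gracefully when the model cannot be reached. The sketch below retries a remote model call with exponential backoff and then falls back to a simple local rule. Everything in it is an assumption for illustration: `predict_with_fallback`, the retry settings, and the loan-amount rule are hypothetical, and a production system would also queue the request for later scoring.

```python
import time


def predict_with_fallback(call_model, fallback, payload,
                          retries=3, base_delay=0.5, sleep=time.sleep):
    """Try the remote model a few times, then degrade to a local rule.

    Returns a (decision, source) tuple so callers can see which path was used.
    """
    for attempt in range(retries):
        try:
            return call_model(payload), "model"
        except (ConnectionError, TimeoutError):
            # Wait longer after each failure (0.5s, 1s, ...), but not after the last.
            if attempt < retries - 1:
                sleep(base_delay * 2 ** attempt)
    return fallback(payload), "fallback"


def flaky_model(payload):
    # Stand-in for a remote inference call during an outage.
    raise ConnectionError("link down")


def simple_rule(payload):
    # Hypothetical conservative rule: send large amounts to manual review.
    return "review" if payload["amount"] > 50_000 else "approve"


result = predict_with_fallback(
    flaky_model, simple_rule, {"amount": 72_000}, sleep=lambda s: None
)
print(result)  # ('review', 'fallback')
```

Returning the source alongside the decision matters operationally: it lets the team measure how often the system runs in degraded mode, which is itself a signal about whether the infrastructure assumptions hold.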

4. Governance and Risk Are Afterthoughts

AI introduces new forms of risk:

- Biased decision-making

- Regulatory exposure

- Explainability challenges

- Unclear operational accountability

In regulated sectors—finance, SACCOs, healthcare, public services—these risks are not theoretical. Yet governance frameworks are often considered “later concerns.”

By the time risks are addressed, fixing them often means redesigning the system, and at that point it is already unviable.

Governance is not a compliance exercise. It is a design requirement.

5. Vendors Are Chosen Instead of Partners

Many organizations select AI providers based on:

- Price

- Speed

- Promises

This leads to transactional relationships where:

- The vendor owns the system logic

- Knowledge is not transferred

- Long-term maintenance is unclear

- Responsibility for failures is ambiguous

AI initiatives require partners who think in terms of ownership, longevity, and accountability, not delivery milestones.

What Successful AI Projects Do Differently

Across successful deployments, the pattern is consistent:

- AI is tied to a specific operational problem

- Data readiness is validated before modeling

- The design accounts for infrastructure constraints

- Governance is embedded early

- The AI system has a clear internal owner

Most importantly, success is measured by operational impact, not technical sophistication.

Final Thought

AI failure in Kenya is rarely about intelligence or ambition. It is about starting without foundations.

Organizations that treat AI as a strategic system—rather than a technical experiment—are the ones that succeed.